Data is often referred to as the new oil, a phrase that has become increasingly popular in the world of technology and business. However, just like crude oil, raw data in its original form is not immediately useful or valuable. It needs to be refined, processed, and transformed before it can drive insights, power analytics, or inform business decisions. This is where ETL (Extract, Transform, Load) pipelines come into play. ETL pipelines are the backbone of modern data engineering, enabling organizations to move data from various sources, clean and transform it, and load it into systems where it can be analyzed and used effectively.

Today, I’m excited to introduce my open-source project: end_to_end_ETL. This repository is designed to be a practical, beginner-friendly resource that demonstrates how to build a robust ETL pipeline from scratch using Python. Whether you are new to data engineering or looking to solidify your understanding of ETL concepts, this project provides a comprehensive, hands-on guide to building pipelines that are both scalable and maintainable.

Why ETL?

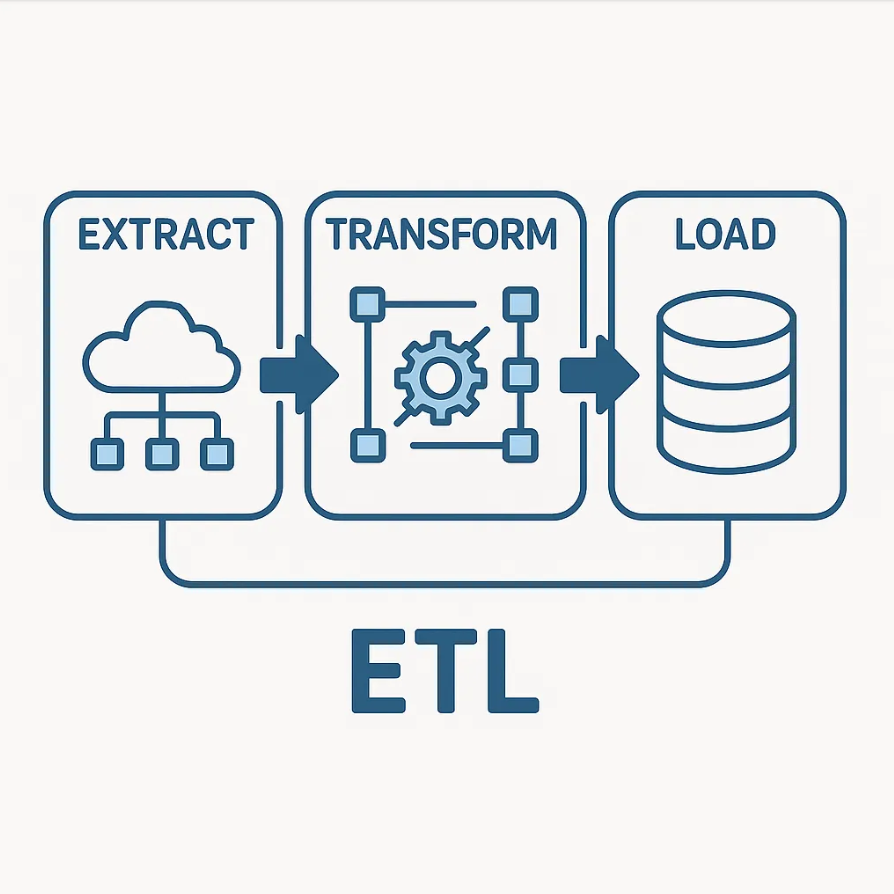

ETL is a cornerstone of modern data engineering. It’s the process of:

- Extracting raw data from various sources,

- Transforming it into a clean, usable format,

- Loading it into a data warehouse or database for analysis.

This workflow is essential for anyone working with data, whether you’re a data scientist, analyst, or engineer

Project Overview

The end_to_end_ETL project is designed to be simple yet comprehensive. Here’s what you’ll find inside:

- Extraction: Scripts to fetch data from different sources (APIs, CSVs, databases, etc.).

- Transformation: Data cleaning, normalization, and enrichment steps using Python’s powerful libraries.

- Loading: Efficiently storing the processed data into a target database or file system.

The code is modular, well-documented, and easy to extend for your own use cases.

Key Features

- Pythonic Implementation: The project leverages popular Python libraries like pandas for data wrangling, SQLAlchemy for database interactions, and logging for robust monitoring and debugging.

- Configurable: The pipeline is designed to be easily adaptable to new data sources or destinations. Configuration files allow you to specify source and target details without changing the core code.

- Flexible Storage: While the default storage is a local PostgreSQL instance, the pipeline is built to support other databases such as MySQL, SQLite, or even cloud-based solutions like Amazon Redshift or Google BigQuery. Just update your connection settings and adapt the loading logic to your preferred database system.

- End-to-End Example: The repository includes a complete example that takes you from raw data extraction to analytics-ready tables, demonstrating the full journey and highlighting best practices along the way.

Orchestrating and Automating with Airflow

One of the most powerful aspects of this project is its ability to be automated and orchestrated using Apache Airflow. Airflow is an open-source platform designed to programmatically author, schedule, and monitor workflows. By integrating your ETL scripts with Airflow, you can:

- Schedule your ETL jobs to run at regular intervals (hourly, daily, weekly, etc.), ensuring your data is always up-to-date.

- Monitor and retry failed tasks automatically, reducing manual intervention and improving reliability.

- Visualize your workflow and track data lineage, making it easier to understand dependencies and troubleshoot issues.

To get started with Airflow, you can wrap each stage of your ETL (extract, transform, load) as separate Airflow tasks and define their dependencies in a DAG (Directed Acyclic Graph). This approach makes your pipeline robust, maintainable, and production-ready. The project includes example DAGs and instructions for integrating your ETL scripts with Airflow, so you can hit the ground running.

Who Is This For?

This project is designed for a wide range of users:

- Beginners looking to understand ETL concepts with hands-on code.

- Data professionals who want a template for their own projects.

- Anyone interested in automating data workflows

Whether you are a student, a data analyst transitioning into engineering, or a seasoned professional looking to streamline your workflow, this project provides valuable insights and practical tools.

How to Get Started

- Clone the repo:

git clone https://github.com/ayushupadhyay22/end_to_end_ETL.git2. Install dependencies:

pip install -r requirements.txt3. Configure your environment

Update the configuration files to specify your data sources, target databases, and any transformation rules specific to your use case.

4. Check README

The repository includes a detailed README with step-by-step instructions, customization tips, and troubleshooting advice.

Best Practices and Tips

Building an ETL pipeline is not just about moving data from point A to point B. It’s about ensuring data quality, reliability, and scalability. Here are some best practices to keep in mind as you work with this project or build your own pipelines:

- Modularize your code: Keep extraction, transformation, and loading logic in separate modules. This makes your pipeline easier to maintain and extend.

- Use configuration files: Avoid hardcoding source and target details. Use YAML or JSON configuration files to make your pipeline flexible and adaptable.

- Implement logging and monitoring: Use Python’s logging library to track pipeline execution, errors, and performance metrics. This will help you troubleshoot issues and optimize your workflow.

- Test your pipeline: Write unit tests for each stage of your pipeline to catch errors early and ensure reliability.

- Document your code: Clear documentation makes it easier for others (and your future self) to understand and maintain the pipeline.

Final Thoughts

Whether you’re just starting your data journey or looking to streamline your workflows, the end_to_end_ETL project is a great place to learn and experiment. By following the examples and best practices provided, you’ll gain a solid understanding of how to build ETL pipelines that are robust, scalable, and production-ready. Check it out on GitHub, give it a ⭐ if you find it useful, and let’s keep building awesome data tools together!

Happy coding, and may your data always be clean, consistent, and ready for analysis!