What makes one AI feel magical while another falls flat? I believe the difference is rarely a bigger model or a cleverer trick — it’s the context wrapped around the model’s decisions. That’s why context engineering has become such a critical discipline. It’s about shaping the information, instructions, and tools an AI sees so it behaves intelligently in the moment. In this piece, I’ll unpack what context engineering means, where it came from, why it matters across design, training, and deployment, how it succeeds (and fails) in the real world, the main techniques engineers use, and the road ahead — including the ethical questions we can’t ignore.

What Is Context Engineering? (Definition and Evolution)

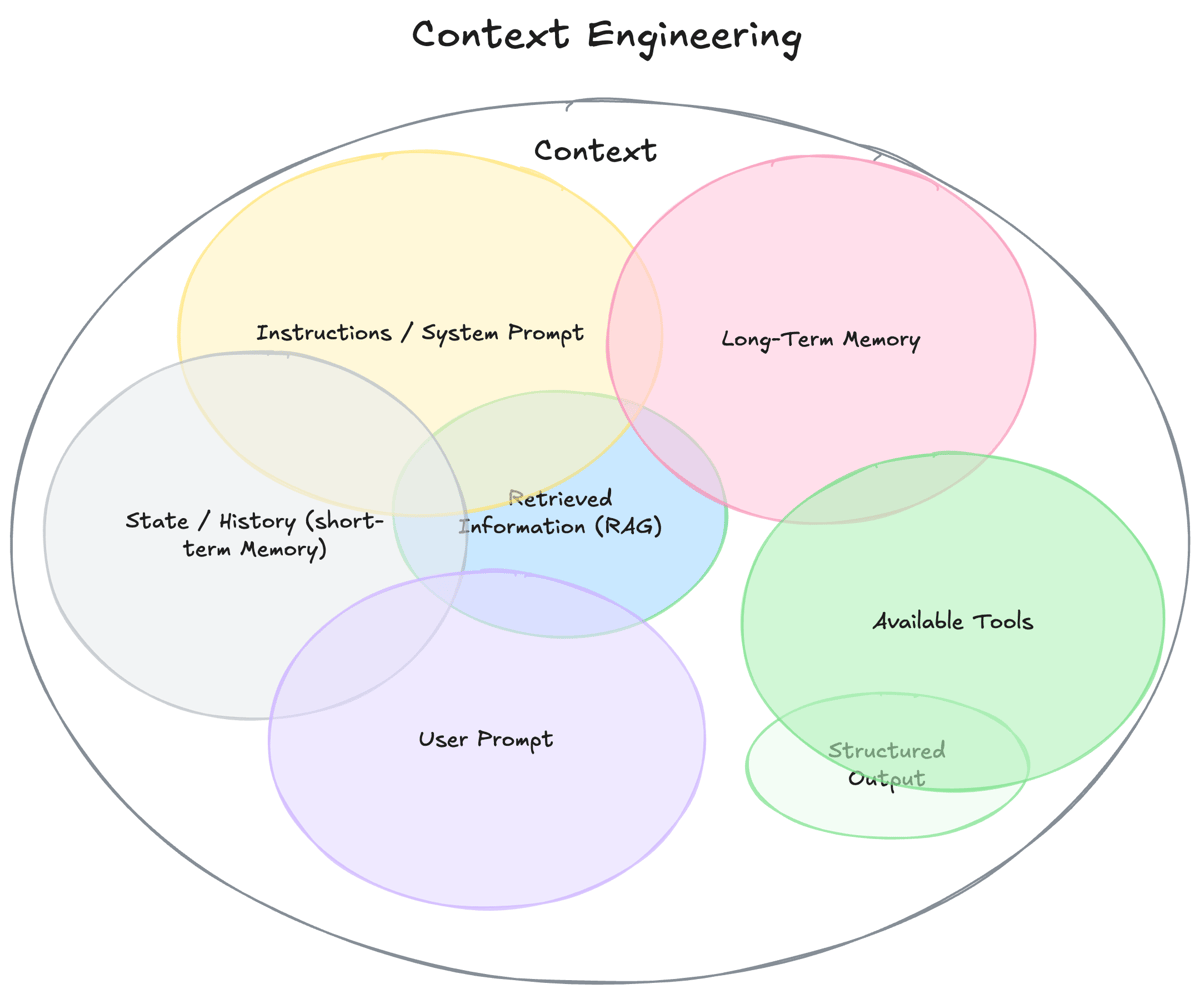

When I say context engineering, I’m talking about making AI systems aware of the situation they operate in by giving them the right inputs at the right time. Philipp Schmid frames it neatly as the practice of designing dynamic systems that deliver the necessary information and tools, formatted appropriately, so a language model has everything it needs to complete a task. While that definition lands squarely in the era of LLMs, the idea has older roots.

In the 1990s, researchers like Bill Schilit helped popularize context-aware computing — software that adapts to factors like location, nearby devices, or time. Anind Dey’s classic definition (2001) described context as any information that characterizes the situation of an entity in an interaction. Early systems used simple cues: a phone app might switch behavior based on GPS or the time of day. Useful — yet mostly rule-based and narrow.

Fast forward to the 2010s and 2020s, and I’ve seen context go from peripheral to central. Instead of reacting to a single sensor, models learn from rich, structured context. Transformers use attention to relate every token to the others in the context window; recommendation systems factor in time, place, and past behavior to tailor suggestions. Today’s context engineering goes further: it doesn’t just “have” context, it engineers the system to collect, understand, and apply it. With LLM agents, that means building pipelines that fetch knowledge, maintain memory, and invoke tools. The work moves beyond prompt wording to architecting the full stage on which the model performs.

In short, we’ve evolved from context-aware apps (reacting to environments) to context engineering as a strategy: ensuring an intelligent system always has the pertinent knowledge, history, and situation to make the best call. I believe the tidy rule of thumb is this: an AI is only as smart as the context you give it.

Why Context Matters in Modern AI Systems

I’ve noticed that model performance often hinges less on raw parameter count and more on whether the system is fed the right context — when it needs it and how it’s structured.

Design (System Architecture). Modern systems look more like orchestrated agents than single-shot models. Imagine a travel assistant: before it asks an LLM to answer, it may need flight prices, your calendar, weather, and a booking API. That demands a retrieval layer for knowledge, a memory store for history, and a suite of callable tools. Good context engineering at design time is deciding which inputs and tools exist, how they’re formatted, and who owns the assembly process. Without this scaffolding, even a state-of-the-art model can stumble.
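To make the design-time decisions above concrete, here is a minimal sketch of a single assembly step that owns retrieval, memory, and tool listing for the travel-assistant example. Every name in it (`ContextBundle`, `assemble_context`, `fake_retrieve`, the sample facts) is a hypothetical placeholder I made up for illustration, not part of any particular framework.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextBundle:
    """Everything the model will see for one request."""
    instructions: str
    retrieved: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)
    tool_names: list[str] = field(default_factory=list)

    def render(self) -> str:
        # A fixed section order keeps the prompt layout predictable.
        sections = [
            ("Instructions", [self.instructions]),
            ("Retrieved facts", self.retrieved),
            ("Conversation history", self.history),
            ("Available tools", self.tool_names),
        ]
        lines = []
        for title, items in sections:
            if items:  # skip empty sections entirely
                lines.append(f"## {title}")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)


def assemble_context(
    query: str,
    retrieve: Callable[[str], list[str]],
    memory: list[str],
    tools: dict[str, Callable[..., object]],
    max_history: int = 3,
) -> ContextBundle:
    """One owner for the assembly step: retrieval, memory, and tools."""
    return ContextBundle(
        instructions="You are a travel assistant. Use only the facts provided.",
        retrieved=retrieve(query),
        history=memory[-max_history:],  # keep only the most recent turns
        tool_names=sorted(tools),
    )


# Hypothetical stand-in for a real retrieval layer.
def fake_retrieve(query: str) -> list[str]:
    return ["LIS to BER on Friday: from 89 EUR", "Berlin forecast: rain"]


bundle = assemble_context(
    "Find me a flight to Berlin",
    retrieve=fake_retrieve,
    memory=["User prefers morning flights"],
    tools={"book_flight": lambda **kw: None, "get_calendar": lambda: []},
)
print(bundle.render())
```

The point of the sketch is ownership: one function decides what goes in and in what order, so a bad answer can be traced back to a specific section of the bundle.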

Training (Data and Models). I believe giving models context during training pays off repeatedly. Researchers have shown you can decompose signals into context-free and context-sensitive parts and blend them, helping models learn when to rely on general knowledge versus situational cues. In practice, that looks like contextual embeddings, metadata tags, and architectures that explicitly represent context. The result is often better performance and more interpretable behavior.

Deployment (Inference & Interaction). Once live, an AI faces a messy world. This is where real-time context integration matters most: remembering the last user action, reading sentiment, ingesting sensor telemetry, adjusting for weather or traffic. Mature pipelines manage tool use, switch strategies based on context, and keep a tight rein on what flows into the model. From what I’ve seen, many agent failures are not model failures — they’re context failures. Own the context, and you avoid a lot of pain.
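One way to keep a tight rein on what flows into the model is a simple priority-and-budget filter. The sketch below is an assumption-laden illustration: the priorities are hand-assigned, and a whitespace word count stands in for a real tokenizer.

```python
def trim_to_budget(items, budget, count_tokens=lambda s: len(s.split())):
    """Keep the highest-priority items that fit within a token budget.

    `items` is a list of (priority, text) pairs; higher priority wins.
    The default `count_tokens` is a crude word count, not a real tokenizer.
    """
    kept, used = [], 0
    for priority, text in sorted(items, key=lambda p: p[0], reverse=True):
        cost = count_tokens(text)
        if used + cost <= budget:  # skip anything that would overflow
            kept.append(text)
            used += cost
    return kept, used


candidates = [
    (3, "User's last action: opened the refund form"),
    (1, "Marketing blurb about the spring sale event"),
    (2, "Order 1042 shipped two days ago"),
]
kept, used = trim_to_budget(candidates, budget=14)
print(kept, used)
```

With a budget of 14, the low-priority marketing text is dropped while the last user action and the order status stay in.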

Bottom line: without context, models err or feel bland; with it, they can seem remarkably capable and reliable.

Successes and Failures of Context-Aware AI

When teams get context right, the wins feel effortless. When they don’t, the failures can be memorable.

Personalized Recommendations. Services like Netflix, Amazon, and Spotify thrive on context: time of day, device, past behavior, even implied mood. I’ve seen how a Friday-night playlist versus a Sunday-morning one makes perfect sense when you respect shifting context. Engagement follows.

Smart Assistants and Everyday Apps. Assistants that nudge you to leave early because traffic is heavy are stitching together calendar, location, and real-time conditions. Navigation apps reroute based on congestion and user habits. Smart homes adapt lighting and temperature to presence and time. These micro-adaptations add up to experiences that feel helpful rather than mechanical.

Misunderstanding User Intent. Consider a frustrated message to a support bot: “I want to cancel everything.” Absent context, an agent might literally cancel every service — clearly not what the user meant. A context-aware system reads sentiment, checks history, and asks a clarifying question. I believe this is the difference between a costly incident and a calm save.
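Here is a rough sketch of the "ask before acting" guard described above. The keyword sets and the `decide` function are hypothetical and deliberately naive; a production system would lean on sentiment and intent models rather than word lists.

```python
from dataclasses import dataclass

BROAD_TERMS = {"everything", "all"}               # illustrative, not exhaustive
DESTRUCTIVE_VERBS = {"cancel", "delete", "close"}  # illustrative, not exhaustive


@dataclass
class Decision:
    action: str   # "clarify" or "execute"
    message: str


def decide(message: str, active_services: list[str]) -> Decision:
    """Ask a clarifying question when a destructive request is broad."""
    words = {w.strip(".,!?").lower() for w in message.split()}
    destructive = bool(words & DESTRUCTIVE_VERBS)
    broad = bool(words & BROAD_TERMS)
    # Account history is the context: ambiguity only matters if the
    # user actually has more than one thing that could be cancelled.
    if destructive and broad and len(active_services) > 1:
        options = ", ".join(active_services)
        return Decision(
            "clarify",
            f"You have several active services ({options}). Which one do you mean?",
        )
    return Decision("execute", "Proceeding with the request.")


result = decide("I want to cancel everything.", ["Streaming", "Cloud storage"])
print(result.action, "-", result.message)
```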

Microsoft’s Tay (2016). Tay’s meltdown wasn’t a neural architecture failure — it was a context failure. Exposed to toxic input without guardrails, it mirrored the worst of what it saw. Today’s systems go live with carefully engineered context: behavioral instructions, filters, and feedback loops designed to prevent “garbage in, garbage out.”

The lesson is consistent: successful systems deliberately engineer context; failures neglect it.

Techniques and Frameworks for Context Modeling and Engineering

There’s no single recipe, but a handful of patterns show up again and again.

Embeddings and Retrieval-Augmented Generation (RAG). Encode content and queries into vectors, retrieve semantically relevant snippets, and feed them into the model at runtime. It’s easy to see why this approach is everywhere: it lets an LLM “look up” fresh knowledge rather than rely only on what it memorized in training, which reduces (though does not eliminate) hallucination. With a well-curated vector store, the model answers as if it “knows” recent or niche facts, because it’s pulling them in on demand.
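The retrieve-then-prompt loop can be sketched in a few lines. To stay self-contained, this example swaps in a toy bag-of-words vector for a learned embedding model and a plain list for a vector store; the documents and function names are invented for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)  # Counter returns 0 for missing terms
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Place retrieved snippets ahead of the question in the prompt."""
    context = "\n".join(f"- {s}" for s in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "the refund window is 30 days from delivery",
    "shipping takes 3 to 5 business days",
    "gift cards never expire",
]
top = retrieve("how long is the refund window", docs, k=1)
print(build_prompt("how long is the refund window", docs, k=1))
```

Word overlap is a weak proxy for semantic similarity, so treat this as the shape of the pipeline rather than a retrieval method worth shipping.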

Metadata and Contextual Features. Sometimes the cleanest move is to pass context as explicit features: timestamps, geolocation, device type, session IDs, urgency scores. Think of it as classic feature engineering, but for situation. I’ve seen this make fraud models saner and help conversational systems separate one user’s history from another’s.
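As a small illustration of "feature engineering for situation", the sketch below turns a timestamp, device type, and urgency score into explicit numeric features. The feature names and the device vocabulary are my own assumptions.

```python
from datetime import datetime, timezone


def context_features(ts: datetime, device: str, urgency: float) -> dict[str, float]:
    """Turn raw situational signals into explicit numeric features."""
    known_devices = ("mobile", "desktop", "tablet")  # assumed vocabulary
    features = {
        "hour_of_day": float(ts.hour),
        "is_weekend": 1.0 if ts.weekday() >= 5 else 0.0,  # Sat=5, Sun=6
        "urgency": max(0.0, min(1.0, urgency)),            # clamp to [0, 1]
    }
    # One-hot encode the device; unknown devices map to all zeros.
    for name in known_devices:
        features[f"device_{name}"] = 1.0 if device == name else 0.0
    return features


feats = context_features(
    datetime(2024, 3, 2, 22, 15, tzinfo=timezone.utc),  # a Saturday evening
    device="mobile",
    urgency=1.4,  # out-of-range input gets clamped
)
print(feats)
```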

Simulated Environments and Domain Randomization. For robotics and autonomy, much of the relevant context is physical and dynamic. Simulators let you vary lighting, textures, physics, and rare events on purpose, so the real world looks like “just another variation.” This narrows the sim-to-real gap and lets you rehearse edge cases safely.
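Domain randomization boils down to sampling a fresh environment configuration for every training episode. The sketch below shows only that sampling step; the parameter names and ranges are made up, and hooking them into an actual simulator is left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    light_intensity: float  # relative brightness
    friction: float         # surface friction coefficient
    texture_id: int         # index into a hypothetical texture library
    rare_event: bool        # e.g. an obstacle appearing suddenly


def sample_params(rng: random.Random, rare_event_prob: float = 0.05) -> SimParams:
    """Draw one randomized environment configuration for an episode."""
    return SimParams(
        light_intensity=rng.uniform(0.2, 1.5),
        friction=rng.uniform(0.4, 1.0),
        texture_id=rng.randrange(10),
        rare_event=rng.random() < rare_event_prob,
    )


rng = random.Random(0)  # seeded so runs are reproducible
episodes = [sample_params(rng) for _ in range(1000)]
print(episodes[0])
```

Raising `rare_event_prob` is the knob for rehearsing edge cases more often than they would occur in the real world.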

Contextual Adaptation in RL and Meta-Learning. Approaches like CAVIA (fast context adaptation via meta-learning) partition a model’s parameters into shared weights, which are meta-trained across tasks, and a small set of context parameters, which are adapted to each new task with a few gradient steps. In effect, the agent carries a compact “task embedding” it can quickly tune for new dynamics or reward functions. I believe this gives you the best of both worlds: general knowledge plus rapid, context-specific adaptation. Contextual bandits echo the idea on the decision-making side: choose actions using side information, not in a vacuum.
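To illustrate the contextual bandit idea (not CAVIA itself), here is a minimal epsilon-greedy sketch that tracks a running mean reward per (context, action) pair. The contexts, actions, and reward table are invented, and the rewards are deterministic to keep the example easy to follow.

```python
import random
from collections import defaultdict


class ContextualEpsilonGreedy:
    """Keeps a running mean reward for each (context, action) pair."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)
        self.means = defaultdict(float)

    def choose(self, context):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore
        # Exploit: best known action for this specific context.
        return max(self.actions, key=lambda a: self.means[(context, a)])

    def update(self, context, action, reward):
        key = (context, action)
        self.counts[key] += 1
        # Incremental mean: new = old + (reward - old) / n
        self.means[key] += (reward - self.means[key]) / self.counts[key]


# Hypothetical rewards: evenings favour "playlist", mornings favour "news".
true_reward = {
    ("evening", "playlist"): 1.0, ("evening", "news"): 0.0,
    ("morning", "playlist"): 0.0, ("morning", "news"): 1.0,
}

bandit = ContextualEpsilonGreedy(["playlist", "news"], epsilon=0.2)
for step in range(500):
    ctx = "evening" if step % 2 == 0 else "morning"
    act = bandit.choose(ctx)
    bandit.update(ctx, act, true_reward[(ctx, act)])

bandit.epsilon = 0.0  # turn off exploration to inspect the learned policy
print(bandit.choose("evening"), bandit.choose("morning"))
```

The same action set yields different choices depending on the context, which is exactly what a context-free bandit cannot do.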

In practice, teams mix and match: retrieval for knowledge, metadata for situational cues, simulators for robustness, and adaptive policies for control — often orchestrated with frameworks like LangChain or LlamaIndex that help assemble the pieces.

Future Directions and Ethical Considerations

As models grow more context-savvy, we gain power — and responsibility.

Where We’re Headed

- Bigger Context Windows and Better Memory. I believe we’ll see models comfortably handle long histories — entire project threads, multi-hour media, months of interaction — reducing the “forgetfulness” that plagues today’s systems.

- Multimodal Context and World Models. Text is just one slice. Vision, audio, and sensor streams will shape richer internal world models — a robot maintaining a live map of a home, an assistant tracking commitments across documents and voice notes.

- Auto-Contextualization. Agents will get better at deciding what they need to know, then fetching it safely: “I’m unsure — let me check X,” followed by a targeted retrieval or tool call.

- Personalization vs. Generalization. Expect clear layers separating global knowledge from personal context, with user-owned long-term memory and deliberate sandboxing to prevent leakage.

The Hard Questions We Must Answer

- Privacy. Context is often personal. I believe we have to minimize what we collect, keep it secure, and be transparent about use. On-device processing and consent-based design matter, as does guarding against unintended inferences from “innocent” signals.

- Bias and Fairness. Context can correct bias — or entrench it. Using the right context (e.g., financial history) while excluding protected attributes is a nuanced engineering and policy problem. Auditability is non-negotiable.

- Misinterpretation and Errors. If the context is wrong, the outcome is wrong — sometimes dangerously so. Build validation checks, fallbacks, and clarification prompts into the pipeline.

- Accountability and Transparency. Context-rich decisions must be explainable. That likely means context logs: what was retrieved, which tools were used, and which signals influenced the result.

- Security and Misuse. More context channels mean more attack surface. Guard against prompt injection, poisoned retrieval, and tampered sensors. Verify sources, sign data where possible, and constrain agent autonomy to safe domains.
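The context-log and data-signing ideas in the list above can be combined in a small sketch: record what was retrieved and which tools ran, then attach an HMAC so later tampering is detectable. The field names and the hard-coded key are placeholders of my own; a real deployment would load the key from a secrets manager.

```python
import copy
import hashlib
import hmac
import json

SECRET_KEY = b"demo-key-for-illustration-only"  # placeholder, not a real secret


def sign(payload: dict) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()


def make_log_entry(request_id: str, retrieved: list[str], tools_used: list[str]) -> dict:
    """Record what shaped a decision, plus a signature to detect tampering."""
    payload = {
        "request_id": request_id,
        "retrieved": retrieved,
        "tools_used": tools_used,
    }
    return {"payload": payload, "signature": sign(payload)}


def verify(entry: dict) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(entry["signature"], sign(entry["payload"]))


entry = make_log_entry("req-001", ["refund policy v3"], ["lookup_order"])

# Simulate an attacker editing the log after the fact.
tampered = copy.deepcopy(entry)
tampered["payload"]["retrieved"].append("injected snippet")

print(verify(entry), verify(tampered))
```

Signing the log does not stop prompt injection on its own, but it does make the audit trail trustworthy when you investigate one.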

Final Thoughts

I believe context engineering is how we move from clever demos to dependable systems. Give an AI the right situation (structured knowledge, relevant history, appropriate tools) and it behaves less like a parlor trick and more like a thoughtful collaborator. The promise is huge: assistants that truly remember, vehicles that anticipate, services that adapt gracefully. But delivering on that promise depends on design discipline and ethical guardrails.

If we approach context with care — owning the pipelines, validating the inputs, documenting the influences — we unlock AI that does the right thing at the right moment. The quest for systems that “really understand” isn’t about one magic prompt; it’s about engineering the stage so understanding can happen. I’ve seen that when teams take context seriously, everything else starts to click.