One of the most exciting aspects of attending a conference like KDD is witnessing the intersection where academia, industry, investors, and founders come together. It offers a rare glimpse into how advanced AI research is being applied to real-world challenges across sectors.

One can witness the complex AI journey end to end.

This year’s KDD conference was no different. As always, it attracted attendees from a wide array of organizations, including major players in tech and AI like Google DeepMind, LinkedIn, Netflix, Meta, and Amazon.

Beyond the tech giants, it was particularly fascinating to me to see large, non-digital organizations — such as NASA, Samsung, and Boeing — using AI to solve real-world problems that impact our daily lives.

For us at Montrose Software, the most inspiring sessions were those that focused on applied AI — realistic, grounded use cases where AI applications directly solve problems in the physical world. Here’s a deeper look at some standout sessions and key themes.

AI Grounded in Real-World Applications

Siemens AG: Michael May

Siemens AG shared fascinating AI applications in manufacturing. Their internal AI Copilot system allows engineers to give natural language commands like, “Build me a product similar to such and such blueprint but with higher eco-friendliness and lower price parts”. Note that the user can prompt for both the static design and the optimisation targets (eco-friendliness and price in this case). Their LLM was fine-tuned on historical designs and blueprints, with knowledge graphs describing everything that Siemens knows, all to boost the productivity and creativity of Siemens engineers. This highlighted AI’s role as a human assistant, a tool rather than a replacement, improving efficiency and innovation at the same time in production environments.

The key to success for Siemens was embedding their domain expertise and their IP as knowledge graphs into the LLMs. This produced value that could never have been achieved without the marriage of the LLM and internal knowledge.

Other areas of focus were predictive maintenance, real-time production management and tuning at scale, and human-robot collaboration.

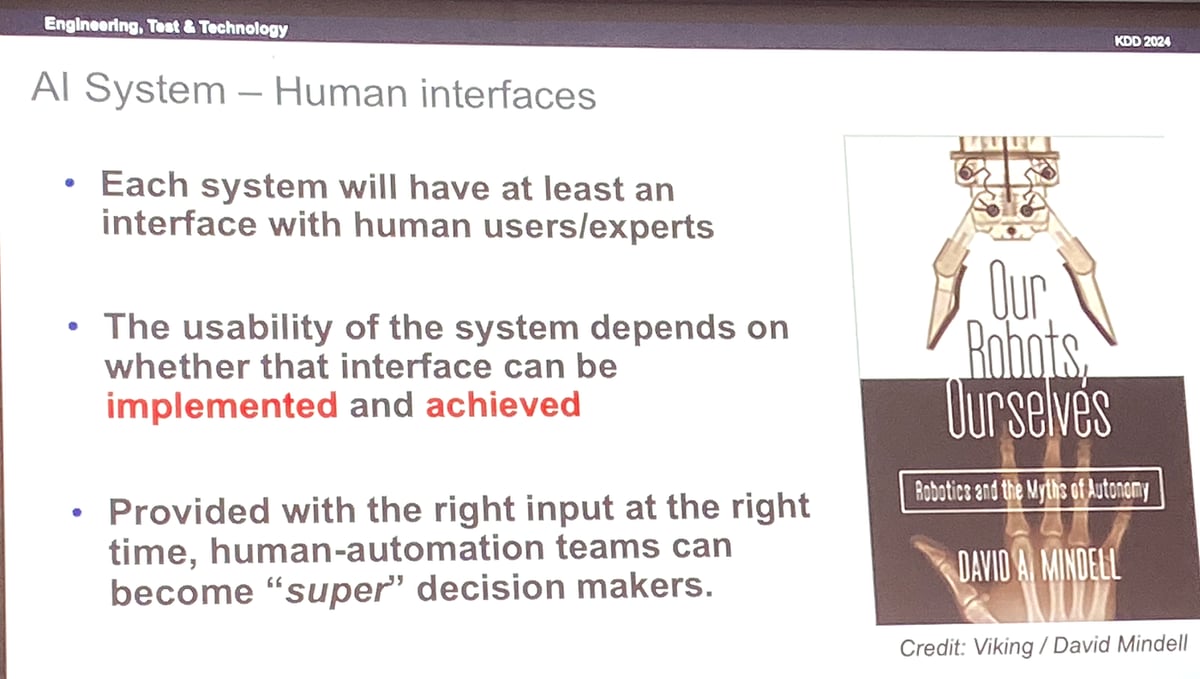

Boeing: Dragos Margineantu

Dragos outlined the modern risks in the world of complex, critical AI solutions. Boeing brought attention to the importance of transparency in AI models used for critical tasks like flight systems and collision avoidance. Dragos Margineantu stressed that AI should not be treated as a black box that solves big problems with magic. He also reminded us of one of the riskiest pitfalls on the AI journey: the “Almost done, just need more data” trap.

One of the key points reinforced by his talk was the need to decouple the inference process from the decision-making process. The latter is inherently risk-based and should involve human oversight. Boeing’s message was clear: decision-making must incorporate context and domain expertise, with AI providing not just predictions but also risk assessments and measured error rates.

On top of that, the recurring theme was repeated yet again: building AI in the context of domain knowledge, with human expert input and oversight, is essential. An example was given: a model tasked with assisting collision avoidance was built in just a couple of weeks and performed very well in laboratory conditions. Once its results were put in front of pilots, the model failed to cope with reality and needed many more months of fine-tuning and testing before it could be put into practice.
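To make the decoupling concrete, here is a minimal sketch, not Boeing's actual system. The names `Inference` and `decide`, the thresholds, and the way the error rate widens the estimate are all assumptions made for illustration. The point is only that the model reports a prediction together with its measured error rate, while a separate, risk-based policy decides what to do and hands uncertain cases to a human.

```python
from dataclasses import dataclass


@dataclass
class Inference:
    """What the model reports: a prediction plus its measured error rate."""
    collision_probability: float  # model estimate, between 0 and 1
    error_rate: float             # error measured on validation data, between 0 and 1


def decide(inference: Inference, act_above: float = 0.8, ignore_below: float = 0.1) -> str:
    """Hypothetical risk-based policy, kept separate from the model itself.

    The thresholds are illustrative assumptions; in practice they would be
    set by domain experts according to the cost of each kind of mistake.
    """
    # Widen the estimate by the measured error rate to get cautious bounds.
    lower = max(0.0, inference.collision_probability - inference.error_rate)
    upper = min(1.0, inference.collision_probability + inference.error_rate)

    if lower >= act_above:
        return "alert"              # risk is high even in the optimistic case
    if upper <= ignore_below:
        return "no_action"          # risk is low even in the pessimistic case
    return "escalate_to_human"      # uncertain: a human makes the call


if __name__ == "__main__":
    for estimate in (0.95, 0.5, 0.02):
        print(estimate, decide(Inference(estimate, error_rate=0.05)))
```

Keeping `decide` outside the model means the risk policy can be reviewed and changed by domain experts without retraining anything.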

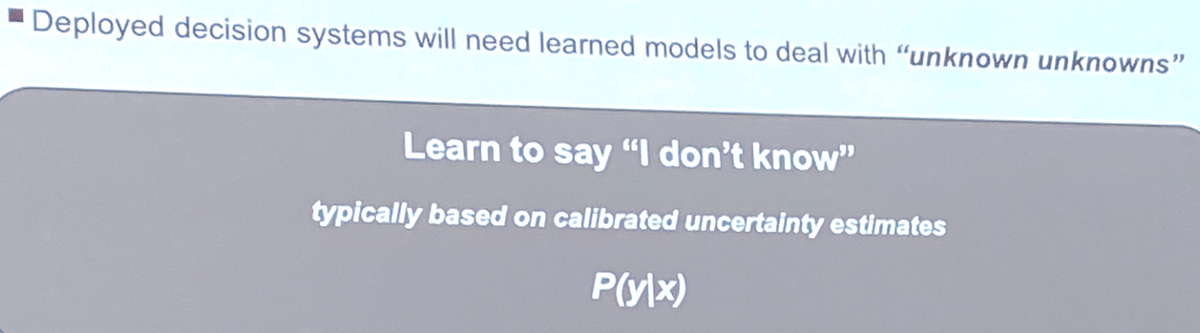

Keeping a model in the lab for too long, without exposure to reality, leaves the unknown unknowns undiscovered and postpones the most critical optimisations until the late stages of the program, making the entire process costly and risky.

NASA: Nikunj C. Oza

NASA’s presentation on their Earth System Digital Twin (ESDT) offered a glimpse into large-scale AI projects designed to model complex natural systems.

The message was grounded in practicality: “I don’t care about AI unless it solves our problems and serves our mission.”

This underscored the theme that AI’s value lies in its ability to address real-world challenges, with its role in modeling Earth’s systems illustrating the practical impact of applied AI.

Imageomics || ABC Global Climate Center: Tanya Berger-Wolf

Tanya Berger-Wolf from ABC Global Climate Center introduced a groundbreaking initiative that uses AI for nature classification at scale, where human senses and sensors fall short. One notable example was identifying species that appear identical to the human eye but differ in crucial ways. AI’s ability to recognize these high-resolution details is instrumental in preserving biodiversity and tackling conservation challenges. Her emphasis on AI as a tool for humans, not something we should adopt and compare ourselves with, resonated deeply with me. Her message was endorsed by many other leaders present over the week, reinforcing the idea that AI must serve practical purposes and be understood from the inside out, not treated as an opaque solution to every problem.

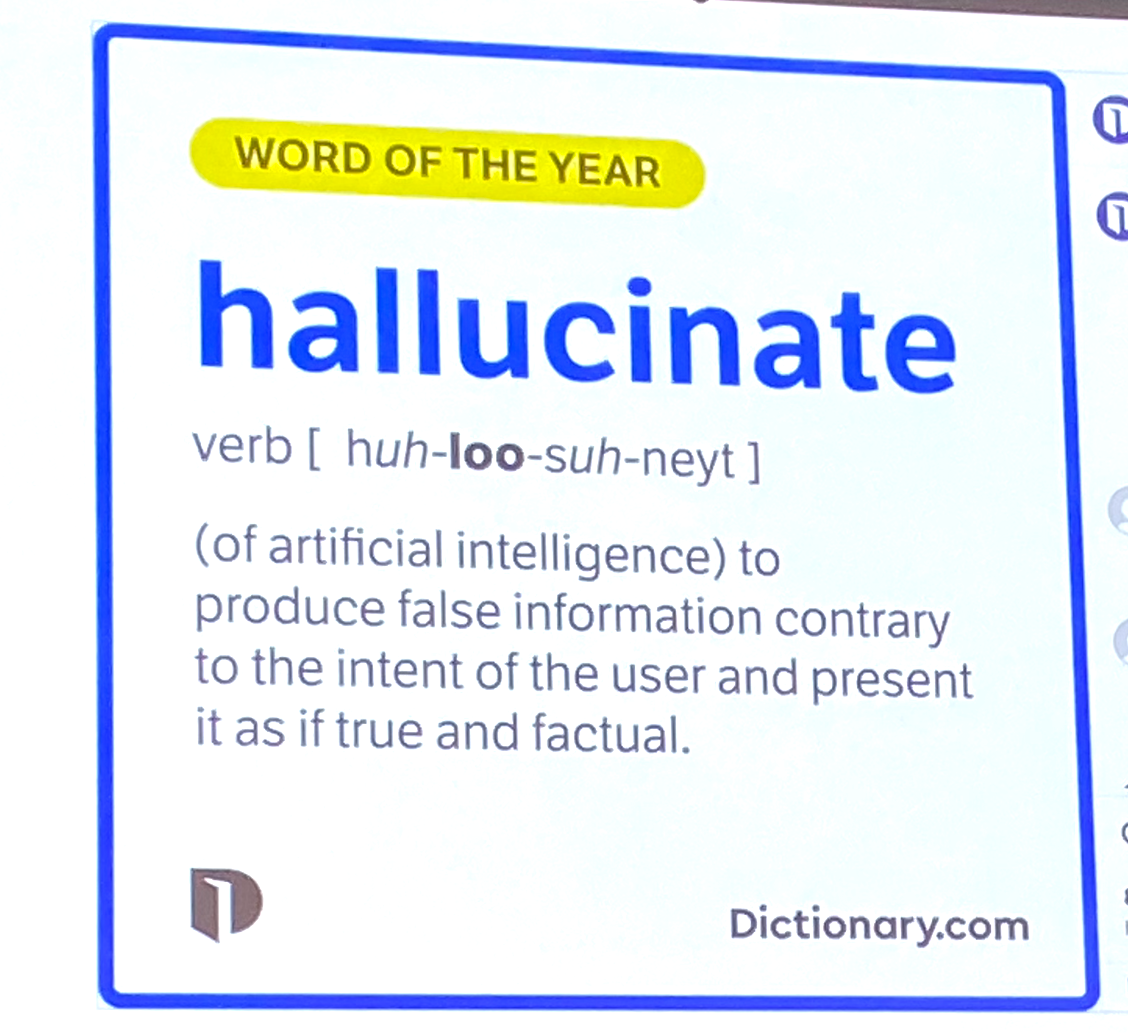

Tanya, Dragos and Luna pointed out that there is not enough good data available among all the noise on the Internet. For example, until recently there was no data about polar bears dying out, and no information about aircraft backlogs at airports. This stressed the importance of healthy skepticism and limited trust in LLMs, and the need for metrics and benchmarks grounded in reality that empower data-driven decision making and prioritization.

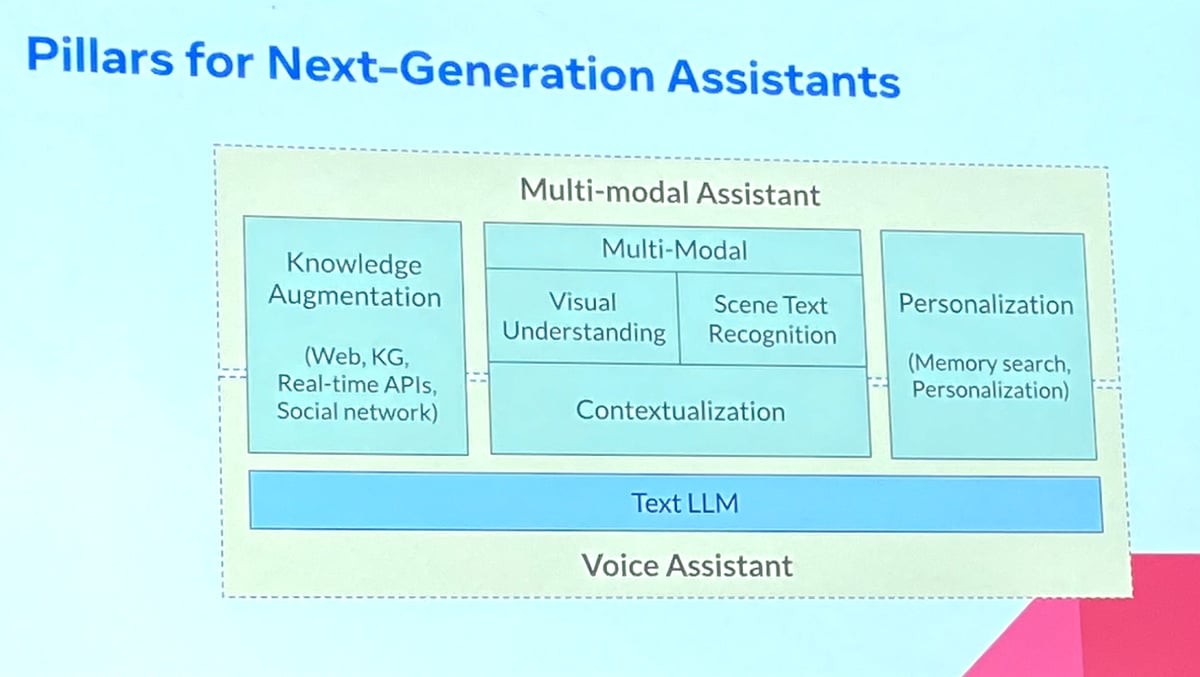

Meta: Luna Dong

Meta shared developments in intelligent personal assistants, expanding on existing LLM (Large Language Model) capabilities with federated RAG and knowledge graphs. Their vision for interactive, context-aware glasses and personal LLMs represents the next logical step in AI’s evolution. Yet again, knowledge and knowledge graphs were highlighted as a competitive advantage for an AI-driven product.

It was interesting to see many other realistic applications of AI (ML in particular) across all the sectors:

- recommendation and ranking systems from Netflix and Google,

- advancements in trustworthy and personalized assistants,

- financial fraud detection from Feedzai,

- code generation from GitHub (not to be confused with software engineering),

- hardware building and optimization for silicon chips,

- cyber security use-cases for noise reduction and detecting the unknowns,

- genetics modeling and drug invention.

I am sure there were many other fascinating applications mentioned at the conference; I just could not be in all the venues at once.

Risks and Mitigation Strategies

Several risks emerged during the conference, particularly around the unchecked development of AI systems:

- not enough collaboration across academia, engineering and business resulting in building solutions focusing on happy paths and being successful in the laboratory environments only,

- focus on the naive ‘bigger is better’ with the assumption that there is at least a linear correlation between the volume of training data plus training GPUs and resulting AI’s performance. This risks missing out on the enormous opportunity in the details of Machine Learning and in high quality, specialized, clear training data,

- Unclear Success Metrics: organizations fail to define success clearly, leading to models optimized for misplaced or artificial benchmarks. This can result in unsuccessful data projects or data programs that fail late,

- Ethics, Bias, Openness, and Intellectual Property: addressing fairness, bias, and data ownership is crucial to building the trust that enables further adoption and applications in critical systems. Being open about the ‘intentions’ of an AI solution seems to be more important than ever before,

- Composite Data Pitfalls: misusing data from random sources and ignoring the actual context and operating domain can result in surprising and risky outcomes in production,

- LLMs Evaluating Other LLMs: without external validation, this reinforces biases and flawed outputs. Combined with late use of internal data, IP, and domain expertise, it leads to lost opportunities, delayed ROI, and missed competitive advantage.

How can these risks be mitigated? A lot of this has been covered in my previous post here: https://medium.com/montrose-software/your-ai-project-done-done-done-1cb787a83bf8 but in short:

- Collaborate across verticals and empower multidisciplinary teams,

- Define clear success metrics embedded in the ultimate mission and goals,

- Leverage MLOps to ensure seamless integration and continuous improvement of AI systems,

- Align AI projects with the organization’s mission and goals, ensuring meaningful and relevant outcomes and incorporating measurable feedback loops from the end users,

- Employ empowered product teams to evaluate AI models based on real-world results and user feedback in a fast, iterative fashion,

- Employ A/B testing and sampling techniques to cut through never-ending speculation and manage the risks of uncertainty and unknown unknowns,

- Implement robust risk management strategies, accounting for both the likelihood and impact of potential failures. Create a safe space for failure, both mentally (with values, best practices, and open and honest communication) and physically (MLOps, DevOps, cloud-native approach, redundancy, etc.),

- Measure: outcomes, costs and quality.
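As one illustration of the A/B testing point above, here is a minimal sketch of a two-proportion z-test using only the Python standard library. The function name and the traffic numbers are made up for this example and are not from any talk. It compares the success rate of a current model (A) with a candidate model (B) and reports how likely the observed difference is under pure chance.

```python
import math


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical numbers: 1000 users per variant, 100 vs 150 successes.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but the success metric itself still has to be the one tied to the mission, as the list above stresses.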

At Montrose Software, these principles are embedded in our values: we consider things ‘done’ when the real outcome, not just the output, is delivered with the intended quality and within understood costs.

Whether it’s building efficient AI solutions, fine-tuning AI with your internal knowledge and expertise, bringing to life the R&D models piling up on your researchers’ shelves, or aligning AI with your business goals through clear success benchmarks, reach out to us. Together, we can help you bridge the gap between innovation and real-world impact.

Final Thoughts: Grounded Innovation and Cross-Vertical Collaboration

The key takeaway from KDD 2024 was that AI’s true power lies in its application to real-world problems. Another recurring theme was the importance of cross-vertical collaboration. To fully leverage the potential of AI, it’s essential to break down the silos between academia, engineering, and business. By building bridges between these verticals, we ensure that cutting-edge academic models are tested against practical, real-world benchmarks and that engineering solutions are driven by business needs.

Collaboration across sectors and disciplines is no longer optional — it’s a requirement. Academic research must be grounded in reality, while business strategies need to be informed by the latest innovations. AI should be treated as a tool, one that solves specific problems and serves clear objectives.

At Montrose Software, we are committed to fostering this kind of collaboration. Whether you’re in academia looking to bring your research into a practical context, or a business seeking to integrate AI with clear goals and success metrics, we’re here to help. Let’s work together to break down barriers and create AI solutions that deliver real-world value.