The Microsoft Azure SRE Agent is a powerful step, but it’s just the beginning.

Last week at Microsoft Build, we saw something important. Not just a flashy demo or another AI integration, but a real shift in tone.

Microsoft introduced the Azure SRE Agent, an AI-powered assistant designed to automate incident response, dig into logs and metrics, and even open GitHub issues when something breaks.

Now, announcements like these come and go. But this one struck a chord because it validated something many of us in SRE have felt for years:

Running production is still far too manual.

And while I genuinely appreciate that validation coming from one of the world’s largest cloud providers, it’s important to pause and look deeper.

Validation is not transformation. And shipping an AI assistant isn’t the same as rethinking how we operate under pressure.

The Hard Truth: LLMs Alone Won’t Make SRE Better

There’s no denying that LLMs are impressive. They can summarize pages of telemetry, correlate log lines, and generate helpful descriptions faster than a human could ever hope to.

But anyone who’s been paged at 2am knows: incidents don’t just need information; they need clarity.

What changed?

Is this alert noise, or is it signaling real impact?

Is the failure isolated, or is it spreading?

And, most importantly, does this matter to the user?

These are the kinds of questions engineers ask during high-stakes moments. And they require more than a summary: they demand reasoning, judgment, and context.

LLMs are great at predicting the next word in a sentence. But incidents aren’t solved by probability; they’re solved by understanding causality.

An AI assistant might surface a spike in error rate. But will it know that the spike coincided with a canary deployment in a single region affecting only enterprise customers behind a feature flag?

That’s not pattern-matching. That’s systems thinking.

And we haven’t trained LLMs to do that. Not yet.
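
To make the canary scenario concrete, here is a minimal Python sketch of the context an engineer joins in their head. Everything in it is hypothetical: the `Change` and `Spike` records, their fields, and the 15-minute window are assumptions for illustration, not something the Azure SRE Agent or any other tool exposes. It only narrows the list of suspects; deciding which one is the actual cause is still the hard part.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Change:
    """A hypothetical change event: a deploy, a flag flip, a config push."""
    kind: str
    at: datetime
    region: str
    customer_tier: str


@dataclass(frozen=True)
class Spike:
    """A hypothetical error-rate spike, already scoped by region and tier."""
    at: datetime
    region: str
    customer_tier: str


def candidate_causes(spike, changes, window=timedelta(minutes=15)):
    """Return changes that happened at most `window` before the spike
    and share its region and customer tier."""
    return [
        c for c in changes
        if timedelta(0) <= spike.at - c.at <= window
        and c.region == spike.region
        and c.customer_tier == spike.customer_tier
    ]
```

A time-only correlation would flag every change in the window. Adding region and tier is the smallest step toward the scoping described above, and it is still a filter, not causal reasoning.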

Thanks for reading Reliability Engineering! Subscribe for free to receive new posts and support my work.

Real SRE Intelligence Can’t Live in a Walled Garden

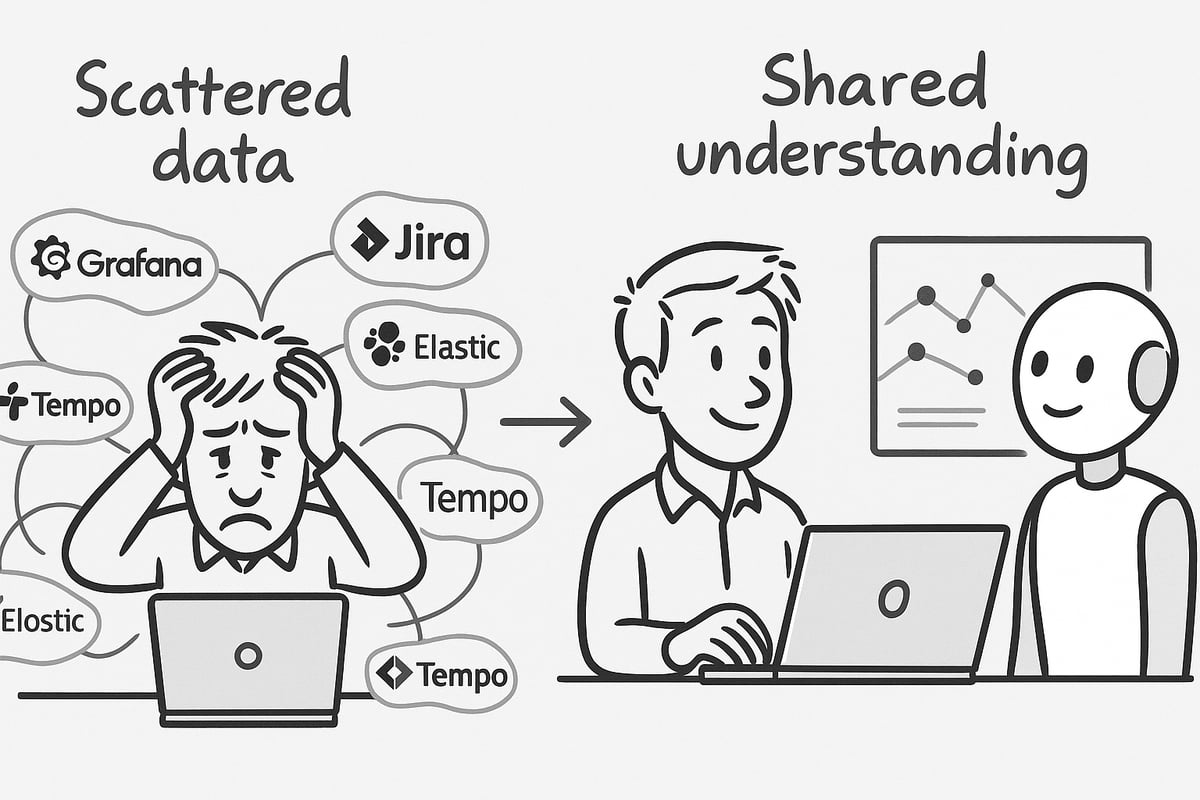

Let’s be honest: most teams don’t live inside a single platform. And yet, most AI tooling does.

The Azure SRE Agent looks promising, but it’s designed for Azure. That’s fine if your stack is all-in on Microsoft. But most modern systems are not.

In practice:

- Metrics live in Prometheus

- Logs live in Elastic or Datadog

- Traces are scattered across Tempo, Dynatrace, or maybe Jaeger

- Tickets are in Jira, alerts ping Slack, and the real context lives in someone’s Notion doc

If your AI assistant can’t move across that sprawl, it’s not really an assistant. It’s another tool with limited reach and limited value.

SREs don’t need more tooling. We need systems that see the whole system and help us connect the dots.
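
As a rough illustration of what "seeing the whole system" could mean at the data level, here is a small Python sketch that merges events from several tools into one ordered timeline. The `Signal` record and its fields are hypothetical, and the source names are just labels: the sketch assumes each tool's events have already been fetched and sorted by time, and it makes no calls to any real API.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Signal:
    """One hypothetical, tool-agnostic event."""
    at: datetime
    source: str   # a label such as "prometheus", "elastic", or "jira"
    summary: str


def unified_timeline(*streams: Iterable[Signal]) -> Iterator[Signal]:
    """Merge per-tool streams, each already sorted by time,
    into a single time-ordered timeline."""
    return heapq.merge(*streams, key=lambda s: s.at)
```

The merge itself is trivial. The real work is in the adapters that turn each vendor's payload into something like `Signal`, which is exactly where single-platform tooling stops.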

What Will Separate Real AI for SRE from Hype?

Let’s cut through the buzz and focus on what will actually matter:

- Causal reasoning, not just correlation. Knowing why something failed, not just what happened around the same time.

- Multi-context awareness. Can it connect changes in deployment history to customer complaints to spikes in latency?

- Cross-stack observability. It must look beyond a single vendor’s dashboard to see the full picture across clouds, tools, and formats.

- Understanding intent and risk. It’s not enough to say “this pod crashed.” A meaningful assistant must understand who it impacts and why it matters, especially in multi-tier SLA environments.

This isn’t theoretical. It’s how real-world incidents work. The closer your AI assistant gets to those layers of nuance, the more trust it will earn.
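
One way to picture the "intent and risk" point is error budget burn rate, a common SRE measure: the observed error rate divided by the error budget the SLO allows. The Python sketch below is only an illustration under stated assumptions. The tier names and SLO targets are made up, and nothing here reflects how any particular assistant scores impact.

```python
def burn_rate(error_rate: float, slo_target: float) -> float:
    """How fast the error budget is being spent; 1.0 means exactly on budget."""
    budget = 1.0 - slo_target
    if budget <= 0:
        raise ValueError("slo_target must be below 1.0")
    return error_rate / budget


# Hypothetical tiers and SLO targets, for illustration only.
SLO_BY_TIER = {"enterprise": 0.999, "free": 0.99}


def impact_by_tier(error_rate_by_tier):
    """Return (tier, burn rate) pairs, highest burn rate first."""
    rates = {
        tier: burn_rate(rate, SLO_BY_TIER[tier])
        for tier, rate in error_rate_by_tier.items()
    }
    return sorted(rates.items(), key=lambda kv: kv[1], reverse=True)
```

With these assumed targets, the same 0.5% error rate burns the enterprise budget about ten times faster than the free-tier budget. That difference is the "who it impacts and why it matters" part that a raw "this pod crashed" message leaves out.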

AI Will Change How We Operate, but Not Without Us

There’s a temptation to believe that if we throw enough machine learning at the problem, the pager will stop buzzing.

It won’t. Not for a while.

The role of AI in SRE isn’t to replace operators. It’s to give them room to think.

Room to breathe when the alert storm hits. Room to ask better questions, faster. Room to focus on the signal, not dig through the noise.

True transformation will come when AI stops trying to act like a chatbot and starts behaving like a teammate. One that listens, reasons, learns, and respects the complexity of real systems.

And maybe even one that says:

"Hey, this might look fine on the dashboard, but I’ve seen this pattern before, and it tends to break badly."

The Future of SRE Is Multi-Agent and Human-Led

I don’t believe in a single AI to rule them all. I believe in a network of intelligent systems, built to assist, not replace.

The future we’re building will have:

- Causal AI that identifies root causes before they spread.

- Intent-aware agents that understand business impact, user tiers, and SLO risk.

- Collaborative workflows that bring human empathy and machine insight together.

Because the most important skill in incident response is not code; it’s calm. And the most valuable system isn’t the one that works perfectly; it’s the one you understand well enough to recover gracefully.

So What Now?

Microsoft’s announcement is a milestone. But the real shift in AI for SRE will happen beyond the keynote stage.

It will happen inside teams like yours: running hybrid stacks, managing real users, and firefighting with more tools than context.

If you’re evaluating AI for your stack, ask yourself:

- Is it just summarizing telemetry?

- Or is it starting to think like someone you'd want on-call next to you?

We have a chance to build something better. Let’s not waste it.

Contact me!

- I advise startups, coach leaders, and help in lots of other ways. If you want to start building a culture of reliability and AI, feel free to contact me.